Bid Shading Fundamentals - Machine Learning Approaches and Advanced Optimization (Part 2)

Introduction to ML-Based Bid Shading

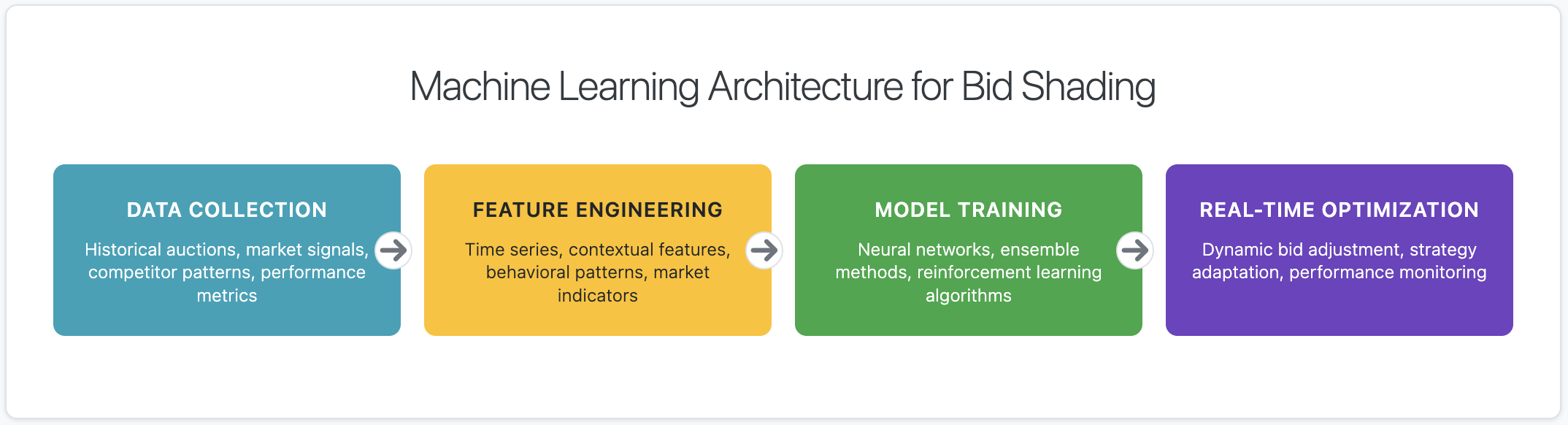

Machine learning approaches to bid shading move from fixed, rule-based algorithms to adaptive, data-driven optimization. They use historical auction data, real-time market signals, and statistical modeling to learn bidding strategies that hand-tuned rules struggle to match.

Unlike the static approaches covered in Part 1, machine learning systems continuously learn and adapt their strategies based on performance feedback, market changes, and emerging patterns. This adaptive capability enables them to handle complex, multi-dimensional optimization problems where traditional techniques reach their theoretical and practical limits.

The Machine Learning Advantage

Machine learning transforms bid shading from reactive rule application to proactive pattern recognition and strategic adaptation. These systems excel at identifying subtle market inefficiencies, predicting competitor behavior, and optimizing across multiple objectives simultaneously while handling the high-dimensional feature spaces that characterize modern programmatic advertising environments.

Supervised Learning Approaches

Supervised learning forms the foundation of most machine learning bid shading systems, using historical auction outcomes to train models that predict optimal bid adjustments. These approaches treat bid shading as a regression problem where the model learns to map auction features to optimal shading factors based on observed performance data.
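To make the regression target concrete, here is a minimal sketch of how an "optimal shading factor" label could be derived for one impression. It assumes a first-price auction and a known win-probability curve; the logistic curve, its parameters, and the function names are hypothetical choices for illustration, not something prescribed by any particular platform.

```python
import math

def win_probability(bid: float, midpoint: float = 2.0, steepness: float = 3.0) -> float:
    """Hypothetical logistic win curve: P(win) rises as the bid passes `midpoint`."""
    return 1.0 / (1.0 + math.exp(-steepness * (bid - midpoint)))

def optimal_shading_factor(value: float, steps: int = 100) -> float:
    """Grid-search the factor f in [0, 1] that maximizes expected surplus.

    Expected surplus for a first-price bid b = f * value is
    (value - b) * P(win | b).
    """
    best_factor, best_surplus = 0.0, float("-inf")
    for i in range(steps + 1):
        factor = i / steps
        bid = factor * value
        surplus = (value - bid) * win_probability(bid)
        if surplus > best_surplus:
            best_factor, best_surplus = factor, surplus
    return best_factor

# Label for an impression valued at 4.0 (arbitrary currency units).
label = optimal_shading_factor(value=4.0)
```

A supervised model would then be trained to predict `label` from auction features, so that no grid search is needed at bid time.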

Linear and Non-Linear Regression Models

Linear regression models provide interpretable relationships between auction features and optimal shading factors, making them suitable for regulated environments or stakeholder communication. Regularization helps prevent overfitting in high-dimensional auction feature spaces: Ridge regression shrinks coefficients toward zero, while Lasso can set some coefficients exactly to zero and so also performs feature selection.
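For reference, the two regularized objectives can be written as follows. The notation is assumed for this sketch: $s_i$ is the observed shading-factor label for auction $i$, $x_i$ its feature vector, $\beta$ the coefficient vector, and $\lambda \ge 0$ the regularization strength.

$$
\hat{\beta}_{\text{ridge}} = \arg\min_{\beta} \sum_{i=1}^{n} \left(s_i - x_i^\top \beta\right)^2 + \lambda \lVert \beta \rVert_2^2,
\qquad
\hat{\beta}_{\text{lasso}} = \arg\min_{\beta} \sum_{i=1}^{n} \left(s_i - x_i^\top \beta\right)^2 + \lambda \lVert \beta \rVert_1
$$

The $\ell_1$ penalty in Lasso is what allows individual coefficients to reach exactly zero; the squared $\ell_2$ penalty in Ridge only shrinks them.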

Non-linear models such as Random Forests and Gradient Boosting Machines capture complex interactions between features that linear models cannot represent. These ensemble methods excel at handling categorical features like inventory type, geographic location, and time-based patterns while providing robust performance across diverse auction conditions.

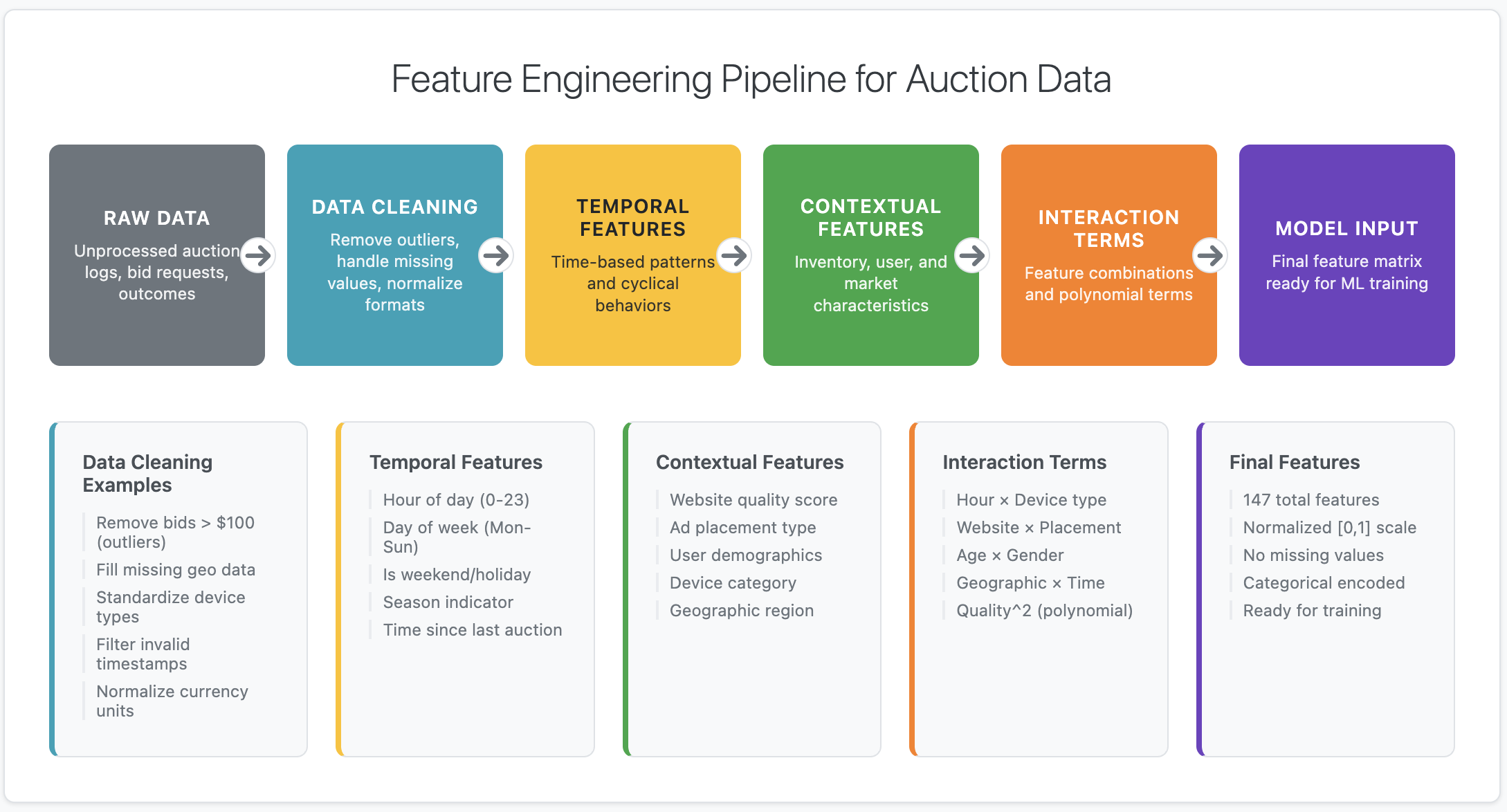

Feature Engineering for Auction Data

Effective supervised learning requires sophisticated feature engineering that captures the temporal, contextual, and competitive dynamics of auction environments. Time-based features include hour-of-day patterns, day-of-week effects, seasonal trends, and holiday impacts that influence both supply and demand dynamics.

Contextual features encompass inventory characteristics (website quality, ad placement, content category), user attributes (demographics, behavioral history, device type), and market conditions (supply/demand ratios, competitive intensity). Advanced feature engineering techniques create interaction terms and polynomial features that capture non-linear relationships between these contextual factors.
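The sketch below illustrates two of these ideas: cyclical encoding of time features (so that hour 23 sits next to hour 0) and a simple interaction term. The feature names and the `floor_price` value are hypothetical.

```python
import math
from datetime import datetime

def time_features(ts: datetime) -> dict:
    """Encode hour-of-day and day-of-week as points on a circle."""
    hour_angle = 2 * math.pi * ts.hour / 24
    dow_angle = 2 * math.pi * ts.weekday() / 7
    return {
        "hour_sin": math.sin(hour_angle),
        "hour_cos": math.cos(hour_angle),
        "dow_sin": math.sin(dow_angle),
        "dow_cos": math.cos(dow_angle),
        "is_weekend": 1.0 if ts.weekday() >= 5 else 0.0,
    }

def interaction_features(features: dict, pairs: list) -> dict:
    """Multiply selected feature pairs to create interaction terms."""
    return {f"{a}_x_{b}": features[a] * features[b] for a, b in pairs}

row = time_features(datetime(2024, 3, 16, 18, 0))  # a Saturday evening
row["floor_price"] = 1.2  # hypothetical contextual feature
row.update(interaction_features(row, [("is_weekend", "floor_price")]))
```

Everything used here (timestamp, floor price) would be known at bid time, which keeps the features free of leakage.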

:::tip Feature Engineering Best Practices

Focus on features that have strong theoretical foundations in auction theory and market dynamics. Temporal features should capture both short-term patterns (hourly cycles) and long-term trends (seasonal effects). Avoid data leakage by ensuring features are available at prediction time and represent information that would realistically be known during live bidding scenarios.

:::

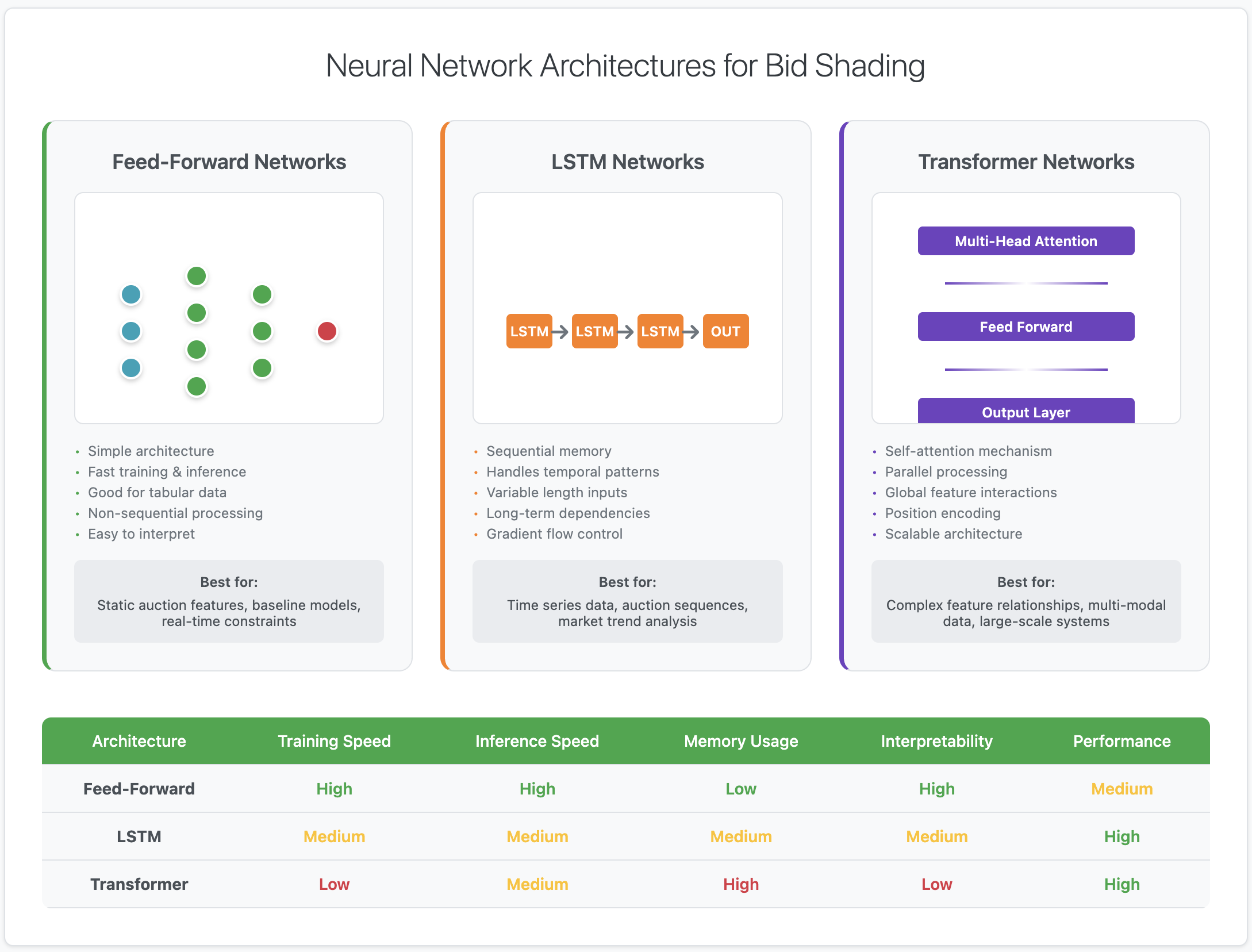

Neural Network Architectures

Neural networks add modeling flexibility to bid shading optimization: they can learn complex feature interactions and non-linear relationships that domain experts might miss. Deep learning architectures can also optimize for multiple objectives at once and adapt to changing market conditions through continuous training.

Feed-Forward Networks for Bid Optimization

Multi-layer perceptrons with carefully designed architectures serve as the backbone for most neural network bid shading systems. Input layers accept preprocessed auction features, while hidden layers with ReLU activations learn increasingly abstract representations of market patterns and bidding opportunities.

Output layer design depends on the specific optimization objective: regression outputs for continuous shading factors, classification outputs for discrete strategy selection, or multi-task outputs that simultaneously predict win probability, cost efficiency, and inventory quality metrics.
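As an illustration of the regression-output case, the following sketch runs a forward pass through a tiny multi-layer perceptron with one ReLU hidden layer and a sigmoid output, which keeps the predicted shading factor in (0, 1). The weights are hand-set placeholders; in practice they would be learned, typically with a deep learning framework rather than plain Python.

```python
import math

def relu(xs):
    return [max(0.0, x) for x in xs]

def dense(inputs, weights, biases):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict_shading_factor(features, params):
    hidden = relu(dense(features, params["w1"], params["b1"]))
    (logit,) = dense(hidden, params["w2"], params["b2"])
    return sigmoid(logit)  # squashes the output into (0, 1)

# Hypothetical parameters: 3 input features, 2 hidden units, 1 output.
params = {
    "w1": [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.0]],
    "b1": [0.0, 0.1],
    "w2": [[1.0, -0.5]],
    "b2": [0.2],
}
factor = predict_shading_factor([1.0, 0.5, 2.0], params)
```

A classification or multi-task head would replace the single sigmoid unit with a softmax layer or several parallel output units.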

Recurrent Networks for Sequential Modeling

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) architectures excel at capturing temporal dependencies in auction sequences. These networks maintain memory of past auction outcomes and market conditions, enabling them to adapt bidding strategies based on recent performance trends and emerging market patterns.

Sequence modeling becomes particularly powerful when combined with attention mechanisms that allow the network to focus on relevant historical events when making current bidding decisions. This architecture proves especially effective in markets with strong temporal correlations and cyclical patterns.
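The attention step can be sketched as scaled dot-product attention for a single query: the current auction context is compared against embeddings of past events, and the resulting weights decide how much each past outcome contributes. The vectors below are made-up examples; in a trained network the query, keys, and values would come from learned projections.

```python
import math

def attention_summary(query, keys, values):
    """Scaled dot-product attention for one query over past auction events."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    max_score = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(values[0])
    summary = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, summary

query = [1.0, 0.0]                                # current auction context
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]      # past event embeddings
values = [[0.9], [0.5], [0.1]]                    # e.g. past clearing-price ratios
weights, summary = attention_summary(query, keys, values)
```

The past event most similar to the current context receives the largest weight, so `summary` leans toward its value.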

Transformer Architectures

Transformer models, originally developed for natural language processing, show promising applications in bid shading through their ability to model complex relationships between auction features without relying on sequential processing. Self-attention mechanisms enable these models to identify relevant feature interactions across different time scales and market contexts.

Reinforcement Learning Systems

Reinforcement learning frames bid shading as a sequential decision-making problem: across a stream of auctions, the agent learns bidding strategies through trial-and-error interaction with the market environment. This paradigm fits auction settings where delayed feedback and strategic interactions determine long-term success.

Multi-Armed Bandit Approaches

Contextual bandits provide an elegant framework for bid shading where each auction represents a decision point with contextual information (auction features) and multiple actions (different shading strategies). Upper Confidence Bound (UCB) and Thompson Sampling algorithms balance exploration of new strategies with exploitation of known successful approaches.

Contextual bandits excel in environments where auction outcomes provide immediate feedback, enabling rapid adaptation to changing market conditions. These algorithms naturally handle the exploration-exploitation tradeoff inherent in testing new bidding strategies while maintaining performance on proven approaches.
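A minimal sketch of the exploration-exploitation idea follows, using UCB1 over a small set of candidate shading factors. Note the simplification: this version is context-free, whereas a contextual variant would condition on auction features (for example one bandit per context bucket, or a LinUCB-style model). Rewards are assumed to be scaled to [0, 1], and the simulated reward table is invented for the example.

```python
import math

class UCB1ShadingBandit:
    """Context-free UCB1 over discrete shading factors (rewards in [0, 1])."""

    def __init__(self, factors):
        self.factors = list(factors)
        self.counts = [0] * len(self.factors)
        self.mean_rewards = [0.0] * len(self.factors)

    def select(self) -> int:
        # Try every arm once before using confidence bounds.
        for arm, count in enumerate(self.counts):
            if count == 0:
                return arm
        total = sum(self.counts)
        scores = [
            mean + math.sqrt(2 * math.log(total) / count)
            for mean, count in zip(self.mean_rewards, self.counts)
        ]
        return scores.index(max(scores))

    def update(self, arm: int, reward: float) -> None:
        self.counts[arm] += 1
        self.mean_rewards[arm] += (reward - self.mean_rewards[arm]) / self.counts[arm]

bandit = UCB1ShadingBandit([0.6, 0.75, 0.9])
simulated_reward = {0.6: 0.1, 0.75: 0.7, 0.9: 0.3}  # hypothetical normalized surplus
for _ in range(500):
    arm = bandit.select()
    bandit.update(arm, simulated_reward[bandit.factors[arm]])
best_factor = bandit.factors[bandit.counts.index(max(bandit.counts))]
```

Thompson Sampling would replace the confidence bonus with a draw from a posterior over each arm's reward.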

Deep Reinforcement Learning

Deep Q-Networks (DQN) and Policy Gradient methods enable reinforcement learning agents to handle high-dimensional state spaces typical in programmatic advertising. These approaches learn value functions or policies that map complex auction states to optimal bidding actions while considering long-term strategic implications.
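The value-learning idea behind DQN can be shown with its tabular ancestor, Q-learning. The sketch below is a deliberate simplification: a DQN replaces the lookup table with a neural network and adds machinery such as replay buffers, which is omitted here. State names, actions (shading factors), and rewards are hypothetical.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One temporal-difference update of a tabular Q-function."""
    best_next = max(q[next_state].values())
    td_target = reward + gamma * best_next
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]

# Q-table: state -> {shading factor -> estimated long-term value}
q_table = {
    "low_budget": {0.7: 0.0, 0.9: 0.0},
    "high_budget": {0.7: 1.0, 0.9: 0.5},
}
updated = q_learning_update(
    q_table, state="low_budget", action=0.9, reward=0.5, next_state="high_budget"
)
```

The `gamma` term is what lets the agent account for long-term effects, such as budget pacing, rather than only the immediate auction outcome.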

Actor-Critic architectures combine the benefits of value-based and policy-based methods, using neural networks to approximate both state values and optimal policies. This dual approach provides stable learning in complex auction environments while maintaining the ability to handle continuous action spaces for fine-grained bid adjustment.

:::tip Reinforcement Learning Challenges

RL systems require careful reward function design that aligns algorithmic objectives with business goals. Delayed feedback, partial observability, and non-stationary environments characteristic of auction markets create additional complexity. Safe exploration techniques prevent catastrophic performance degradation during learning phases while enabling discovery of superior strategies.

:::

Ensemble Methods

Ensemble approaches combine multiple modeling techniques to achieve superior performance and robustness compared to individual algorithms. These methods leverage the complementary strengths of different approaches while mitigating individual model weaknesses through strategic combination and weighting schemes.

Model Combination Strategies

Simple averaging provides baseline ensemble performance by combining predictions from multiple trained models. Weighted averaging assigns different importance to individual models based on their historical performance, recent accuracy, or confidence levels. More sophisticated approaches use stacking techniques where a meta-model learns optimal combination strategies from model predictions.

Dynamic ensemble methods adapt model weights based on current market conditions, giving higher influence to models that perform well in similar historical contexts. This approach proves particularly effective when different models excel in different market regimes or auction characteristics.
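One simple way to implement performance-based weighting is to weight each model by the inverse of its recent error, as sketched below. The model names and error values are invented; a dynamic ensemble would recompute `recent_errors` per market regime or over a sliding window.

```python
def inverse_error_weights(recent_errors, epsilon=1e-9):
    """Give more weight to models with lower recent error; weights sum to 1."""
    inverse = {name: 1.0 / (err + epsilon) for name, err in recent_errors.items()}
    total = sum(inverse.values())
    return {name: w / total for name, w in inverse.items()}

def ensemble_prediction(predictions, weights):
    """Weighted average of per-model shading-factor predictions."""
    return sum(weights[name] * predictions[name] for name in predictions)

recent_errors = {"linear": 0.10, "gbm": 0.05, "mlp": 0.20}  # hypothetical MAE values
weights = inverse_error_weights(recent_errors)
predictions = {"linear": 0.80, "gbm": 0.70, "mlp": 0.90}
blended = ensemble_prediction(predictions, weights)
```

Setting all errors equal recovers simple averaging, while stacking would replace the fixed formula with a trained meta-model.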

Diverse Model Architectures

Effective ensembles combine models with different inductive biases and learning approaches. Linear models provide interpretable baselines and handle simple relationships effectively. Tree-based methods excel at capturing categorical feature interactions and handling missing data. Neural networks model complex non-linear relationships and feature interactions.

Including traditional rule-based approaches alongside machine learning models creates hybrid systems that maintain performance during model retraining periods and provide fallback strategies when ML models encounter unprecedented market conditions.

Real-Time Optimization

Real-time optimization systems must balance model complexity with computational constraints, delivering bidding decisions within the millisecond-scale response windows of programmatic auctions. This requires careful architecture design that pre-computes expensive operations while maintaining adaptive capabilities.

Online Learning and Model Updates

Online learning algorithms update model parameters continuously as new auction data becomes available, enabling rapid adaptation to market changes without requiring complete model retraining. Stochastic gradient descent variants and incremental learning techniques provide computational efficiency while maintaining model performance.
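The following sketch shows a single-example stochastic gradient descent update for a linear shading model with squared-error loss. The stream of (features, target) pairs is synthetic and noise-free so that convergence is easy to see; real auction data would be noisy and would usually call for a decaying learning rate.

```python
def sgd_step(weights, features, target, learning_rate=0.1):
    """Update linear-model weights from one observation (squared-error loss)."""
    prediction = sum(w * x for w, x in zip(weights, features))
    error = prediction - target
    return [w - learning_rate * error * x for w, x in zip(weights, features)]

# Synthetic stream generated by target = 0.9 - 0.2 * x, with a bias feature of 1.0.
stream = [([1.0, 0.5], 0.8), ([1.0, 1.0], 0.7), ([1.0, 0.0], 0.9)] * 1000
weights = [0.0, 0.0]
for features, target in stream:
    weights = sgd_step(weights, features, target)
```

Because each update touches only one observation, the model can be refreshed as outcomes arrive instead of waiting for a batch retrain.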

Streaming data architectures process auction outcomes in real-time, updating feature statistics, model parameters, and performance metrics with minimal latency. This enables immediate response to market shifts, competitor strategy changes, and emerging auction patterns.
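Feature statistics can be maintained in a single pass without storing the stream. The sketch below uses Welford's algorithm for a running mean and variance; the class name and sample prices are illustrative.

```python
class RunningStats:
    """Welford's online algorithm for mean and sample variance."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0

stats = RunningStats()
for clearing_price in [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]:
    stats.update(clearing_price)
```

Each update is constant time and constant memory, which suits per-segment statistics in a streaming job.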

Edge Computing and Model Serving

Distributed model serving architectures deploy lightweight models across multiple geographic regions to minimize latency while maintaining global optimization coordination. Edge computing enables local decision-making with reduced network overhead while periodically synchronizing with centralized optimization systems.

Model compression techniques including quantization, pruning, and knowledge distillation reduce computational requirements without significantly impacting performance. These optimizations enable deployment of sophisticated models in resource-constrained environments while meeting strict latency requirements.
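As a small illustration of quantization, the sketch below maps floating-point weights to 8-bit integers using a single symmetric scale factor. This is one simple scheme among several; production toolchains typically quantize per layer or per channel and calibrate on real data. The weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]

weights = [0.52, -1.27, 0.003, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The reconstruction error per weight is bounded by half the scale, which is the accuracy cost traded for a smaller, faster model.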

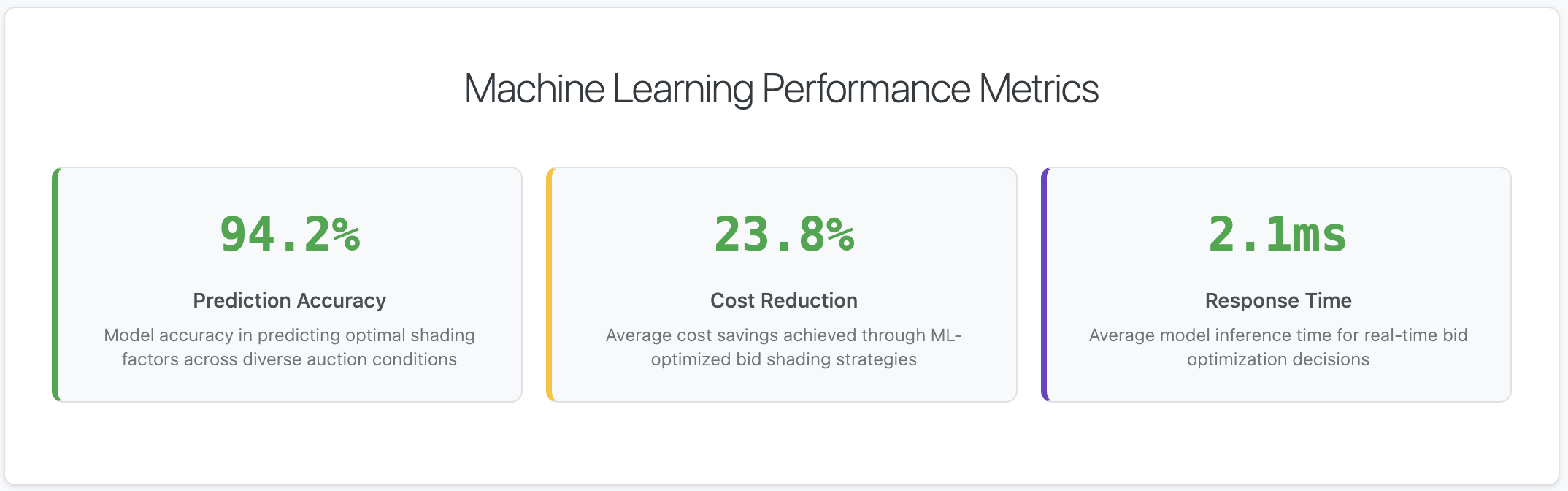

Performance Evaluation

Evaluating machine learning bid shading systems requires sophisticated metrics that capture both short-term tactical performance and long-term strategic success. Traditional machine learning metrics must be augmented with business-specific measures that reflect the ultimate objectives of cost optimization and campaign effectiveness.

Multi-Objective Performance Metrics

Cost efficiency metrics measure the relationship between bid shading aggressiveness and achieved cost reductions while maintaining adequate inventory access. Win rate preservation tracks the system's ability to maintain competitive positioning across different auction segments and market conditions.

Quality metrics assess whether cost optimizations compromise campaign objectives such as viewability, brand safety, or conversion performance. Advanced evaluation frameworks use Pareto efficiency analysis to identify optimal trade-offs between competing objectives rather than optimizing single metrics in isolation.
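Pareto efficiency analysis can be sketched as a dominance filter over candidate strategies. Here each candidate is summarized by two hypothetical metrics, cost per win (lower is better) and win rate (higher is better); the strategy names and numbers are invented.

```python
def pareto_front(candidates):
    """Return candidates not dominated on (lower cost, higher win_rate)."""
    def dominates(a, b):
        no_worse = a["cost"] <= b["cost"] and a["win_rate"] >= b["win_rate"]
        strictly_better = a["cost"] < b["cost"] or a["win_rate"] > b["win_rate"]
        return no_worse and strictly_better

    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]

candidates = [
    {"name": "A", "cost": 2.0, "win_rate": 0.30},
    {"name": "B", "cost": 2.5, "win_rate": 0.40},
    {"name": "C", "cost": 2.6, "win_rate": 0.35},  # worse than B on both metrics
    {"name": "D", "cost": 3.0, "win_rate": 0.45},
]
front_names = [c["name"] for c in pareto_front(candidates)]
```

Strategies on the front represent genuine trade-offs; choosing among them is a business decision rather than a modeling one.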

A/B Testing and Experimental Design

Rigorous experimental design ensures accurate performance measurement in dynamic auction environments. Randomized controlled trials compare ML-based approaches against traditional methods while controlling for market conditions, seasonality, and campaign characteristics that could confound results.
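A basic comparison between a control arm and an ML arm can be sketched with a two-proportion z-test on win rates. This is a simplified illustration: it assumes auctions are independent and looks at win rate only, whereas a real evaluation would also compare cost and quality metrics. The counts below are hypothetical.

```python
import math

def two_proportion_z_test(wins_a, n_a, wins_b, n_b):
    """Two-sided z-test for a difference in win rates between two arms."""
    p_a, p_b = wins_a / n_a, wins_b / n_b
    pooled = (wins_a + wins_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value

# Control (rule-based) vs. treatment (ML-based) arms with equal traffic.
z, p_value = two_proportion_z_test(wins_a=3000, n_a=10000, wins_b=3200, n_b=10000)
```

Randomizing assignment at the auction or user level is what justifies attributing the difference to the bidding strategy rather than to market conditions.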

Advanced experimental designs including multi-armed bandits and adaptive allocation enable continuous optimization while maintaining statistical rigor. These approaches dynamically allocate traffic to better-performing strategies while gathering sufficient data for reliable performance comparisons.

Implementation Considerations

Implementing machine learning bid shading systems requires careful consideration of infrastructure requirements, data pipeline design, and operational monitoring to ensure reliable performance in production environments. Success depends on robust engineering practices that handle the scale and complexity of modern programmatic advertising platforms.

Data Infrastructure and Pipeline Design

High-throughput data pipelines must collect, process, and store auction data at massive scale while maintaining data quality and consistency. Stream processing architectures handle real-time feature computation and model serving while batch processing systems manage historical data analysis and model training.

Feature stores provide centralized repositories for computed features, ensuring consistency between training and serving environments while enabling feature reuse across multiple models and applications. Proper versioning and schema management prevent training-serving skew that can degrade model performance.

Model Lifecycle Management

MLOps practices ensure reliable model deployment, monitoring, and updates in production environments. Automated training pipelines retrain models on fresh data while validation frameworks prevent degraded models from reaching production systems.

Continuous monitoring tracks model performance, data drift, and concept drift that could indicate the need for model updates or architectural changes. Alerting systems provide early warning of performance degradation while automated fallback mechanisms maintain system stability during model failures.
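One common way to quantify data drift for a single feature is the Population Stability Index (PSI), which compares the binned distribution seen in training with the one seen in production. The sketch below is a minimal version; the bin edges, sample values, and any alerting threshold are assumptions that would need tuning per feature.

```python
import math

def bin_fractions(values, bin_edges, epsilon):
    """Fraction of values per bin; values outside the edges are ignored."""
    counts = [0] * (len(bin_edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            upper_ok = v <= bin_edges[i + 1] if is_last else v < bin_edges[i + 1]
            if bin_edges[i] <= v and upper_ok:
                counts[i] += 1
                break
    # Floor at epsilon so empty bins do not produce log(0).
    return [max(c / len(values), epsilon) for c in counts]

def population_stability_index(expected, actual, bin_edges, epsilon=1e-6):
    e = bin_fractions(expected, bin_edges, epsilon)
    a = bin_fractions(actual, bin_edges, epsilon)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
training = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]
psi_same = population_stability_index(training, training, edges)
psi_shifted = population_stability_index(training, live, edges)
```

A PSI near zero indicates matching distributions, while larger values signal a shift that may warrant retraining or an alert.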

:::tip Production Deployment Best Practices

Implement gradual rollout strategies that limit exposure during initial deployment phases. Maintain fallback to traditional algorithms during system failures or unexpected performance degradation. Use feature flags to enable rapid response to production issues while preserving system stability and campaign performance.

:::
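The rollout and fallback practices above can be sketched as a small routing function. Auctions are assigned to the ML path by a deterministic hash bucket, a flag can disable the ML path entirely, and any model failure or out-of-range output falls back to a rule-based factor. All names, the 0.85 fallback factor, and the 5% default are hypothetical.

```python
import hashlib

def in_rollout(auction_id: str, rollout_percent: float) -> bool:
    """Deterministically place an auction in one of 10,000 buckets."""
    digest = hashlib.sha256(auction_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10000
    return bucket < rollout_percent * 100

def choose_bid(auction_id, value, ml_model, rule_based_factor=0.85,
               rollout_percent=5.0, ml_enabled=True):
    """Return (bid, path), falling back to the rule-based factor on any problem."""
    if ml_enabled and in_rollout(auction_id, rollout_percent):
        try:
            factor = ml_model(value)
            if 0.0 < factor <= 1.0:  # sanity-check the model output
                return factor * value, "ml"
        except Exception:
            pass  # fall through to the rule-based path
    return rule_based_factor * value, "rule_based"

def constant_model(value):
    return 0.7  # stand-in for a trained model

bid, path = choose_bid("auction-123", 4.0, constant_model, rollout_percent=100.0)
```

Hashing the auction identifier keeps assignment stable across retries, and `ml_enabled` plays the role of a feature flag for rapid rollback.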

Conclusion

Machine learning approaches represent the current frontier in bid shading optimization, enabling adaptive, data-driven decision making in complex auction environments. These systems learn from large volumes of auction data to find strategies that are difficult for human experts and traditional algorithms to discover.

The evolution from rule-based to machine learning approaches reflects the increasing complexity and competitiveness of programmatic advertising markets. As auction environments become more sophisticated and competitive pressures intensify, the adaptive capabilities of machine learning systems provide essential advantages for maintaining cost efficiency and strategic positioning.

However, the sophistication of machine learning approaches brings corresponding implementation challenges including infrastructure requirements, data quality demands, and operational complexity. Success requires significant investment in engineering capabilities, data infrastructure, and specialized expertise to realize the potential benefits of these advanced optimization techniques.

The future of bid shading lies in the continued integration of domain expertise with machine learning capabilities, creating hybrid systems that combine the interpretability and reliability of traditional approaches with the adaptive power of modern AI techniques. Organizations that successfully navigate this evolution will gain sustainable competitive advantages in increasingly complex auction environments.
